In today’s edition of Commissioner Candice McQueen’s Educator Update, she talks about pending legislation addressing teacher evaluation and TNReady.

Here’s what McQueen has to say about the issue:

As we continue to support students and educators in the transition to TNReady, the department has proposed legislation (HB 309) that lessens the impact of state test results on students’ grades and teachers’ evaluations this year.

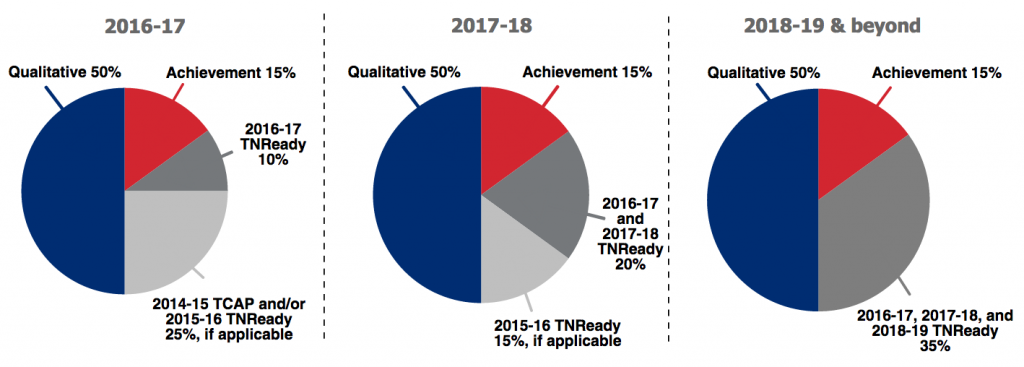

In 2015, the Tennessee Teaching Evaluation Enhancement Act created a phase-in of TNReady in evaluation to acknowledge the state’s move to a new assessment that is fully aligned to Tennessee state standards with new types of test questions. Under the current law, TNReady data would be weighted at 20 percent for the 2016-17 year.

However, in the spirit of the original bill, the department’s new legislation resets the phase-in of growth scores from TNReady assessments as was originally proposed in the Tennessee Teaching Evaluation Enhancement Act. Additionally, moving forward, the most recent year’s growth score will be used for a teacher’s entire growth component if such use results in a higher evaluation score for the teacher.

We will update you as this bill moves through the legislative process, and if signed into law, we will share detailed guidance that includes the specific options available for educators this year. As we announced last year, if a teacher’s 2015-16 individual growth data ever negatively impacts his or her overall evaluation, it will be excluded. Additionally, as noted above, teachers will be able to use 2016-17 growth data as 35 percent of their evaluation if it results in a higher overall level of effectiveness.

And here’s a handy graphic that describes the change:

Of course, there’s a problem with all of this: There’s not going to be valid data to use for TVAAS. Not this year. It’s bad enough that the state is transitioning from one type of test to another. That alone would call into question the validity of any comparison used to generate a value-added score. Now, there’s a gap in the data. As you might recall, there wasn’t a complete TNReady test last year. So, to generate a TVAAS score, the state will have to compare 2014-15 data from the old TCAP tests to 2016-17 data from what we hope is a sound administration of TNReady.

We really need at least three years of data from the new test to make anything approaching a valid comparison. Alternatively, we should start over and build a dataset with this year as the baseline. Better yet, we could go the way of Hawaii and Oklahoma and just scrap the use of value-added scores altogether.

Even in the best of scenarios, a smooth transition from TCAP to TNReady, data validity was going to be a challenge.

As I noted when the issue of testing transition first came up:

Here’s what Lockwood and McCaffrey (2007) had to say in the Journal of Educational Measurement:

We find that the variation in estimated effects resulting from the different mathematics achievement measures is large relative to variation resulting from choices about model specification, and that the variation within teachers across achievement measures is larger than the variation across teachers. These results suggest that conclusions about individual teachers’ performance based on value-added models can be sensitive to the ways in which student achievement is measured.

These findings align with similar findings by Martineau (2006) and Schmidt et al. (2005).

You get different results depending on the type of question you’re measuring.

The researchers tested various VAM models (including the type used in TVAAS) and found that teacher effect estimates changed significantly based on both what was being measured AND how it was measured.

And they concluded:

Our results provide a clear example that caution is needed when interpreting estimated teacher effects because there is the potential for teacher performance to depend on the skills that are measured by the achievement tests.

If you measure different skills, you get different results. That decreases (or eliminates) the reliability of those results. TNReady is measuring different skills in a different format than TCAP. It’s BOTH a different type of test AND a test on different standards. Any value-added comparison between the two tests is statistically suspect, at best. In the first year, such a comparison is invalid and unreliable.

So, we’re transitioning from TCAP to TNReady AND we have a gap in years of data. That’s especially problematic — but, not problematic enough to keep the Department of Education from plowing ahead (and patting themselves on the back) with a scheme that validates a result sure to be invalid.

For more on education politics and policy in Tennessee, follow @TNEdReport
