From a story in Chalkbeat:

> Tennessee’s teacher evaluation system is more accurate than ever in measuring teacher quality…

That’s the conclusion drawn from a report on the state’s teacher evaluation system conducted by the State Department of Education.

The idea is that the system is improving.

Here’s the evidence the report uses to justify the claim of an improving evaluation system:

1) Teacher observation scores now more closely align with teacher TVAAS scores — TVAAS is the value-added modeling system used to determine a teacher’s impact on student growth

2) More teachers in untested subjects are now being evaluated using the portfolio system rather than TVAAS data from students they never taught

On the second item, I’d note that previously, 3 districts were using the portfolio model and now 11 districts use it. This model allows related-arts teachers and those in other untested subjects to present a portfolio of student work to demonstrate the teacher’s impact on student growth. The model is generally applauded by teachers who have a chance to use it.

However, there are 141 districts in Tennessee, and only 11 use this model. Part of the reason is the time it takes to assess portfolios well; another is the cost of having trained evaluators assess them. Since the state has not (yet) provided funding for the use of portfolios, it’s no surprise more districts haven’t adopted the model. If the state wants the evaluation model to really improve (and thereby improve teaching practice), it should support districts in their efforts to provide meaningful evaluation to teachers.

A portfolio system could work well for all teachers, by the way. The state could move to a system of project-based learning and thus provide a rich source of material for both evaluating student mastery of concepts AND teacher ability to impact student learning.

On to the issue of TVAAS and observation alignment. Here’s what the report noted:

> Among the findings, state education leaders are touting the higher correlation between a teacher’s value-added score (TVAAS), which estimates how much teachers contribute to students’ growth on statewide assessments, and observation scores conducted primarily by administrators.

First, the purpose of using multiple measures of teacher performance is not to find perfect alignment, or even strong correlation, but to use multiple inputs to assess performance. Pushing for alignment suggests that the department is actually looking for a way to make TVAAS the central input driving teacher evaluation.

Advocates of this approach will suggest that student growth can be determined accurately by TVAAS and that TVAAS is a reliable predictor of teacher performance.

I would suggest that TVAAS, like most value-added models, is not a significant differentiator of teacher performance. I’ve written before about the need for caution when using value-added data to evaluate teachers.

More recently, I wrote about the problems inherent in attempting to assign growth scores when shifting to a new testing regime, as Tennessee will do next year when it moves from TCAP to TNReady. In short, it’s not possible to assign valid growth scores when comparing two entirely different tests. Researchers at RAND noted:

> We find that the variation in estimated effects resulting from the different mathematics achievement measures is large relative to variation resulting from choices about model specification, and that the variation within teachers across achievement measures is larger than the variation across teachers. These results suggest that conclusions about individual teachers’ performance based on value-added models can be sensitive to the ways in which student achievement is measured.

These findings align with similar findings by Martineau (2006) and Schmidt et al. (2005).

You get different results depending on the type of question you’re measuring.

The researchers tested various VAM models (including the type used in TVAAS) and found that teacher effect estimates changed significantly based on both what was being measured AND how it was measured.

And they concluded:

> Our results provide a clear example that caution is needed when interpreting estimated teacher effects because there is the potential for teacher performance to depend on the skills that are measured by the achievement tests.

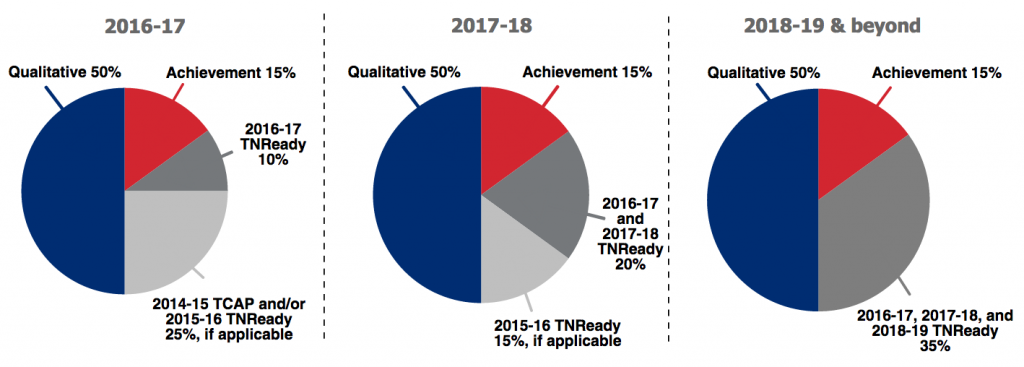

So, even if you buy the idea that TVAAS is a significant differentiator of teacher performance, growth conclusions drawn from next year’s TNReady results simply will not be reliable.

The state is touting improvement in a flawed system that may now be a little less bad. And because it insists on estimating growth from two different tests with differing methodologies, the growth estimates in 2016 will be unreliable at best. If the state wanted to improve the system, it would take two to three years to build growth data based on TNReady. That would mean two to three years of NO TVAAS data in teacher evaluation.

Alternatively, the state could move to a system of project-based learning, with teacher evaluation and professional development based on a Peer Assistance and Review model. Such an approach would be student-centered and would give teachers the professional respect they deserve. It also carries a price tag, but our students are worth the work of both reallocating existing education dollars and finding new ways to invest in our schools.

For more on education politics and policy in Tennessee, follow @TNEdReport